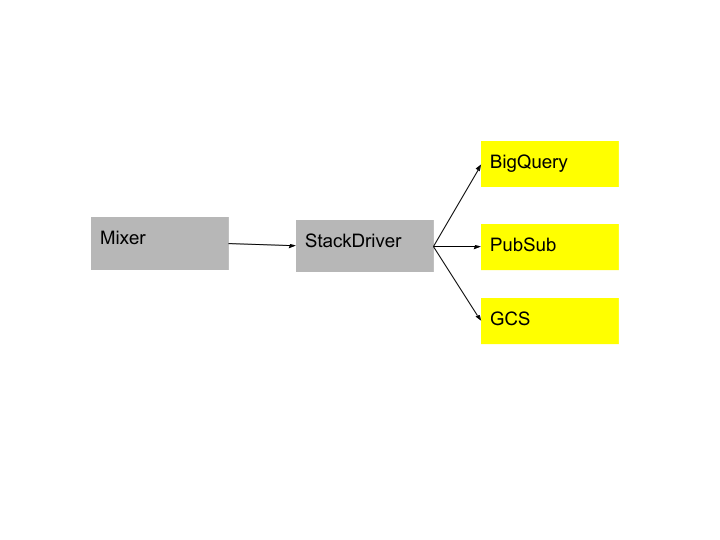

Exporting Logs to BigQuery, GCS, Pub/Sub through Stackdriver

This post shows how to direct Istio logs to Stackdriver and export those logs to configured sinks such as BigQuery, Google Cloud Storage (GCS), or Cloud Pub/Sub. At the end of this post, you can perform analytics on Istio data from your favorite places such as BigQuery, GCS, or Cloud Pub/Sub.

The Bookinfo sample application is used as the example application throughout this task.

Before you begin

Install Istio in your cluster and deploy an application.

Configuring Istio to export logs

Istio exports logs using the `logentry` template. This specifies all the variables that are available for analysis. It contains information like source service, destination service, and auth metrics (coming soon), among others. Following is a diagram of the pipeline:

Istio supports exporting logs to Stackdriver, which can in turn be configured to export logs to your favorite sink like BigQuery, Pub/Sub, or GCS. Follow the steps below to first set up your favorite sink for exporting logs, and then set up Stackdriver in Istio.

Setting up various log sinks

Common setup for all sinks:

- Enable Stackdriver Monitoring API for the project.

- Make sure the `principalEmail` that would be setting up the sink has write access to the project and Logging Admin role permissions.

- Make sure the `GOOGLE_APPLICATION_CREDENTIALS` environment variable is set. Please follow instructions here to set it up.
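The last check in the common setup can be automated before you go further. The following is a minimal sketch, not part of Istio or any Google tooling: `check_credentials` is a hypothetical helper name, and the sketch assumes the variable should point at a readable key file on local disk.

```python
import os


def check_credentials(env=None):
    """Return the credentials path if the variable is set and the file exists.

    `env` defaults to the process environment; passing a dict makes the
    check easy to exercise in isolation.
    """
    env = os.environ if env is None else env
    path = env.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        raise RuntimeError("GOOGLE_APPLICATION_CREDENTIALS is not set")
    if not os.path.isfile(path):
        raise RuntimeError(f"credentials file not found: {path}")
    return path


if __name__ == "__main__":
    try:
        print("Using credentials at", check_credentials())
    except RuntimeError as err:
        print("Credential check failed:", err)
```

The sketch only confirms that the file exists; it does not validate the key or the roles attached to the account.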

BigQuery

- Create a BigQuery dataset as a destination for the logs export.

- Record the ID of the dataset. It will be needed to configure the Stackdriver handler. It would be of the form `bigquery.googleapis.com/projects/[PROJECT_ID]/datasets/[DATASET_ID]`

- Give the sink's writer identity, `cloud-logs@system.gserviceaccount.com`, the BigQuery Data Editor role in IAM.

- If using Google Kubernetes Engine, make sure the `bigquery` scope is enabled on the cluster.

Google Cloud Storage (GCS)

- Create a GCS bucket as a destination for the logs export.

- Record the ID of the bucket. It will be needed to configure Stackdriver. It would be of the form `storage.googleapis.com/[BUCKET_ID]`

- Give the sink's writer identity, `cloud-logs@system.gserviceaccount.com`, the Storage Object Creator role in IAM.

Google Cloud Pub/Sub

- Create a Google Cloud Pub/Sub topic as a destination for the logs export.

- Record the ID of the topic. It will be needed to configure Stackdriver. It would be of the form `pubsub.googleapis.com/projects/[PROJECT_ID]/topics/[TOPIC_ID]`

- Give the sink's writer identity, `cloud-logs@system.gserviceaccount.com`, the Pub/Sub Publisher role in IAM.

- If using Google Kubernetes Engine, make sure the `pubsub` scope is enabled on the cluster.
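The three destination forms recorded above differ only in their prefix and path segments. As a minimal sketch (the function names are hypothetical helpers, not part of any Google or Istio library), they can be assembled like this:

```python
def bigquery_destination(project_id, dataset_id):
    """Build the BigQuery sink destination in the form given above."""
    return f"bigquery.googleapis.com/projects/{project_id}/datasets/{dataset_id}"


def gcs_destination(bucket_id):
    """Build the GCS sink destination in the form given above."""
    return f"storage.googleapis.com/{bucket_id}"


def pubsub_destination(project_id, topic_id):
    """Build the Pub/Sub sink destination in the form given above."""
    return f"pubsub.googleapis.com/projects/{project_id}/topics/{topic_id}"


if __name__ == "__main__":
    # "my-project", "istio_logs", etc. are placeholder IDs for illustration.
    print(bigquery_destination("my-project", "istio_logs"))
    print(gcs_destination("istio-logs-bucket"))
    print(pubsub_destination("my-project", "istio-logs-topic"))
```

The resulting string is the value that goes into the `<sink_destination>` placeholder of the Stackdriver handler configuration.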

Setting up Stackdriver

A Stackdriver handler must be created to export data to Stackdriver. The configuration for a Stackdriver handler is described here.

Save the following yaml file as `stackdriver.yaml`. Replace `<project_id>`, `<sink_id>`, `<sink_destination>`, and `<log_filter>` with their specific values.

```yaml
apiVersion: "config.istio.io/v1alpha2"
kind: stackdriver
metadata:
  name: handler
  namespace: istio-system
spec:
  # We'll use the default value from the adapter, once per minute, so we don't need to supply a value.
  # pushInterval: 1m
  # Must be supplied for the Stackdriver adapter to work
  project_id: "<project_id>"
  # One of the following must be set; the preferred method is `appCredentials`, which corresponds to
  # Google Application Default Credentials.
  # If none is provided we default to app credentials.
  # appCredentials:
  # apiKey:
  # serviceAccountPath:
  # Describes how to map Istio logs into Stackdriver.
  logInfo:
    accesslog.logentry.istio-system:
      payloadTemplate: '{{or (.sourceIp) "-"}} - {{or (.sourceUser) "-"}} [{{or (.timestamp.Format "02/Jan/2006:15:04:05 -0700") "-"}}] "{{or (.method) "-"}} {{or (.url) "-"}} {{or (.protocol) "-"}}" {{or (.responseCode) "-"}} {{or (.responseSize) "-"}}'
      httpMapping:
        url: url
        status: responseCode
        requestSize: requestSize
        responseSize: responseSize
        latency: latency
        localIp: sourceIp
        remoteIp: destinationIp
        method: method
        userAgent: userAgent
        referer: referer
      labelNames:
      - sourceIp
      - destinationIp
      - sourceService
      - sourceUser
      - sourceNamespace
      - destinationIp
      - destinationService
      - destinationNamespace
      - apiClaims
      - apiKey
      - protocol
      - method
      - url
      - responseCode
      - responseSize
      - requestSize
      - latency
      - connectionMtls
      - userAgent
      - responseTimestamp
      - receivedBytes
      - sentBytes
      - referer
      sinkInfo:
        id: '<sink_id>'
        destination: '<sink_destination>'
        filter: '<log_filter>'
---
apiVersion: "config.istio.io/v1alpha2"
kind: rule
metadata:
  name: stackdriver
  namespace: istio-system
spec:
  match: "true" # If omitted match is true.
  actions:
  - handler: handler.stackdriver
    instances:
    - accesslog.logentry
---
```

Push the configuration:

```
$ kubectl apply -f stackdriver.yaml
stackdriver "handler" created
rule "stackdriver" created
```

Send traffic to the sample application. For the Bookinfo sample, visit `http://$GATEWAY_URL/productpage` in your web browser or issue the following command:

```
$ curl http://$GATEWAY_URL/productpage
```

Verify that logs are flowing through Stackdriver to the configured sink:

- Stackdriver: Navigate to the Stackdriver Logs Viewer for your project and look under “GKE Container” -> “Cluster Name” -> “Namespace Id” for Istio Access logs.

- BigQuery: Navigate to the BigQuery Interface for your project and you should find a table with prefix `accesslog_logentry_istio` in your sink dataset.

- GCS: Navigate to the Storage Browser for your project and you should find a folder named `accesslog.logentry.istio-system` in your sink bucket.

- Pub/Sub: Navigate to the Pub/Sub Topic List for your project and you should find a topic for `accesslog` in your sink topic.
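The placeholder replacement asked for when saving `stackdriver.yaml` can also be scripted. The following is a minimal sketch under stated assumptions: `fill_placeholders` is a hypothetical helper, it uses plain string substitution rather than a YAML parser, and it assumes the supplied values contain no quote characters that would break the quoting already present in the file.

```python
# The four placeholders named in the stackdriver.yaml step.
PLACEHOLDERS = ("<project_id>", "<sink_id>", "<sink_destination>", "<log_filter>")


def fill_placeholders(template, values):
    """Replace every known placeholder in `template` with its value.

    `values` maps each placeholder (including angle brackets) to a string.
    Raises KeyError if any placeholder has no value, so a half-filled
    file is never produced.
    """
    missing = [p for p in PLACEHOLDERS if p not in values]
    if missing:
        raise KeyError(f"no value supplied for: {', '.join(missing)}")
    for placeholder in PLACEHOLDERS:
        template = template.replace(placeholder, values[placeholder])
    return template


if __name__ == "__main__":
    # A shortened fragment of the handler; all values are illustrative only.
    fragment = (
        'project_id: "<project_id>"\n'
        "sinkInfo:\n"
        "  id: '<sink_id>'\n"
        "  destination: '<sink_destination>'\n"
        "  filter: '<log_filter>'\n"
    )
    print(fill_placeholders(fragment, {
        "<project_id>": "my-project",
        "<sink_id>": "istio-access-logs",
        "<sink_destination>": "storage.googleapis.com/istio-logs-bucket",
        "<log_filter>": "severity >= INFO",
    }))
```

Writing the result to a new file and applying that file with `kubectl` keeps the original template reusable for other sinks.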

Understanding what happened

The `stackdriver.yaml` file above configured Istio to send access logs to Stackdriver and then added a sink configuration where these logs could be exported. In detail:

Added a handler of kind `stackdriver`:

```yaml
apiVersion: "config.istio.io/v1alpha2"
kind: stackdriver
metadata:
  name: handler
  namespace: <your defined namespace>
```

Added `logInfo` in spec:

```yaml
spec:
  logInfo:
    accesslog.logentry.istio-system:
      labelNames:
      - sourceIp
      - destinationIp
      ...
      ...
      sinkInfo:
        id: '<sink_id>'
        destination: '<sink_destination>'
        filter: '<log_filter>'
```

In the above configuration, `sinkInfo` contains information about the sink where you want the logs to get exported to. For more information on how this gets filled for different sinks please refer here.

Added a rule for Stackdriver:

```yaml
apiVersion: "config.istio.io/v1alpha2"
kind: rule
metadata:
  name: stackdriver
  namespace: istio-system
spec:
  match: "true" # If omitted match is true
  actions:
  - handler: handler.stackdriver
    instances:
    - accesslog.logentry
```
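To get a feel for the text payload the handler's `payloadTemplate` produces, the template can be approximated outside Istio. The sketch below is a Python imitation, not the Go template engine the adapter uses: `render_payload` is a hypothetical name, the sample entry is invented for illustration, and the `or ... "-"` fallback is modeled as "use `-` when the value is missing or empty".

```python
from datetime import datetime, timezone


def render_payload(entry):
    """Approximate the access-log line described by payloadTemplate."""

    def field(name):
        # Mirrors `or (.name) "-"`: fall back to "-" for missing/empty values.
        value = entry.get(name)
        return str(value) if value else "-"

    ts = entry.get("timestamp")
    # Go layout "02/Jan/2006:15:04:05 -0700" corresponds to this strftime format.
    ts_text = ts.strftime("%d/%b/%Y:%H:%M:%S %z") if ts else "-"
    return (
        f'{field("sourceIp")} - {field("sourceUser")} [{ts_text}] '
        f'"{field("method")} {field("url")} {field("protocol")}" '
        f'{field("responseCode")} {field("responseSize")}'
    )


if __name__ == "__main__":
    sample = {
        "sourceIp": "10.0.0.1",
        "timestamp": datetime(2018, 7, 9, 12, 0, 0, tzinfo=timezone.utc),
        "method": "GET",
        "url": "/productpage",
        "protocol": "http",
        "responseCode": 200,
        "responseSize": 4415,
    }
    print(render_payload(sample))
    # 10.0.0.1 - - [09/Jul/2018:12:00:00 +0000] "GET /productpage http" 200 4415
```

The fields listed under `labelNames` are not part of this text line; they are attached to the log entry as labels.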

Cleanup

Remove the new Stackdriver configuration:

```
$ kubectl delete -f stackdriver.yaml
```

If you are not planning to explore any follow-on tasks, refer to the Bookinfo cleanup instructions to shut down the application.

Availability of logs in export sinks

Export to BigQuery happens within minutes (in our experience it is almost instant), export to GCS can be delayed by 2 to 12 hours, and export to Pub/Sub is almost immediate.